|

I am currently a Lead Researcher at the Imaging Algorithm Center of Vivo. Our group, the core algorithm team, uses 3D and AIGC-based technologies to improve the photographic quality and user experience of smartphones. I do research in 3D computer vision and AI-generated content (AIGC), with a focus on neural rendering, 3D Gaussian Splatting (3DGS), 3D reconstruction and modeling, and visual diffusion models. From Jun. 2021 to 2024, I was a Researcher at Tencent AI Lab. Before that, I received my Ph.D. degree from the School of Computer Science, Northwestern Polytechnical University, in 2021, where I was supervised by Prof. Qing Wang. From Jul. 2019 to Aug. 2020, I was a visiting student at the Australian National University (ANU), supervised by Prof. Hongdong Li. I was a nominee for the China Computer Federation (CCF) Outstanding Doctoral Dissertation Award in 2021. I also won the 2021 ACM Xi'an Doctoral Dissertation Award and the 2023 NWPU Doctoral Dissertation Award. !!! Vivo (Xi'an & Hangzhou) is hiring multiple researchers and interns for projects on 3D reconstruction (e.g., NeRF, 3DGS, and NVS) and AIGC-based generation (including image and video diffusion models). Please feel free to contact me via e-mail or WeChat. |

|

🎉🎉2025.06: GUS-IR accepted to TPAMI! |

|

4D Tencent Avatar: 4D Content Generation (Holographic Performance Capture and Human Modeling) |

|

Please find below a complete list of my publications, with representative papers highlighted. IEEE TPAMI and IJCV are top journals in computer vision and computational photography, and CVPR is the premier conference of the computer vision research community. |

|

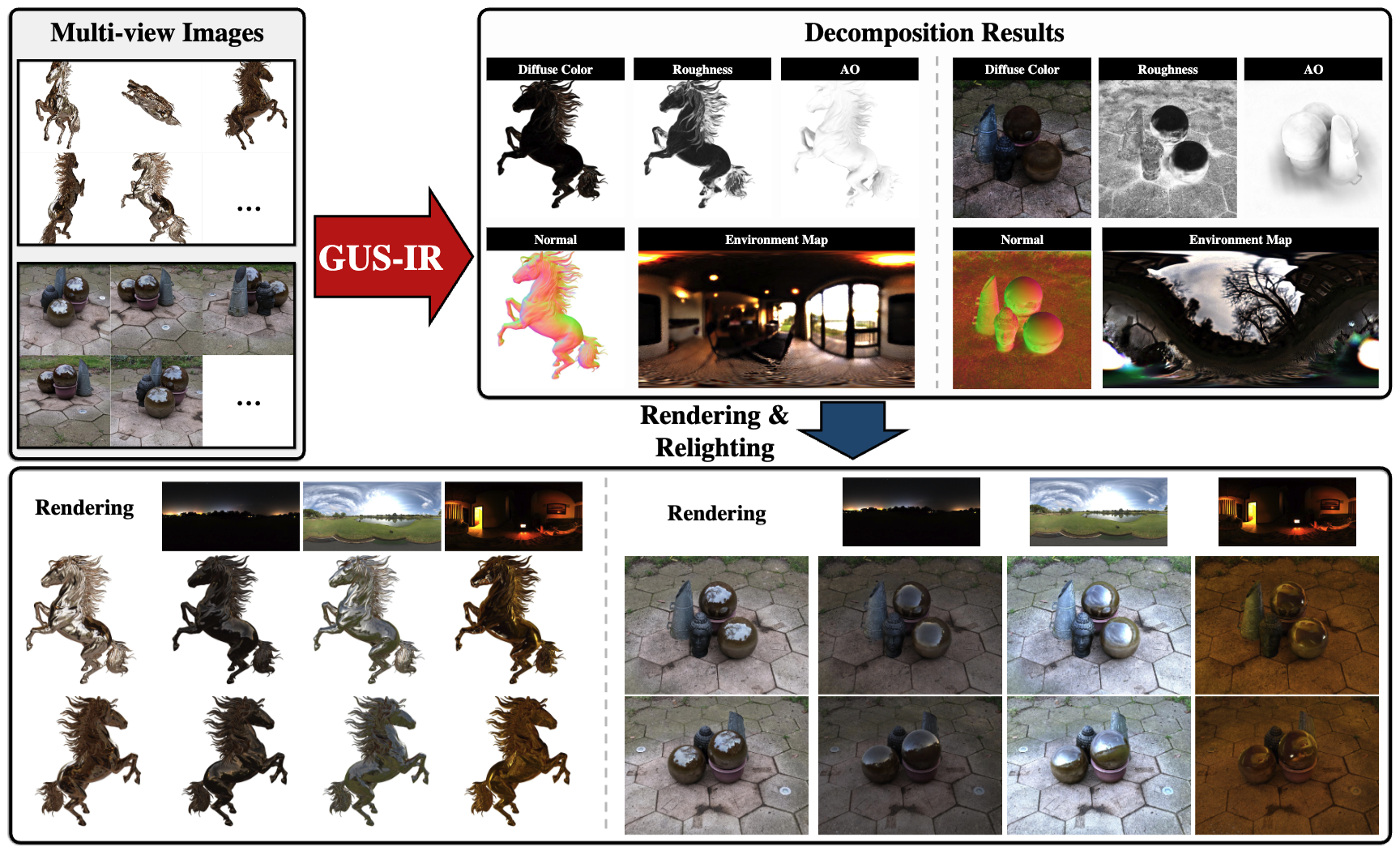

TPAMI 2025

|

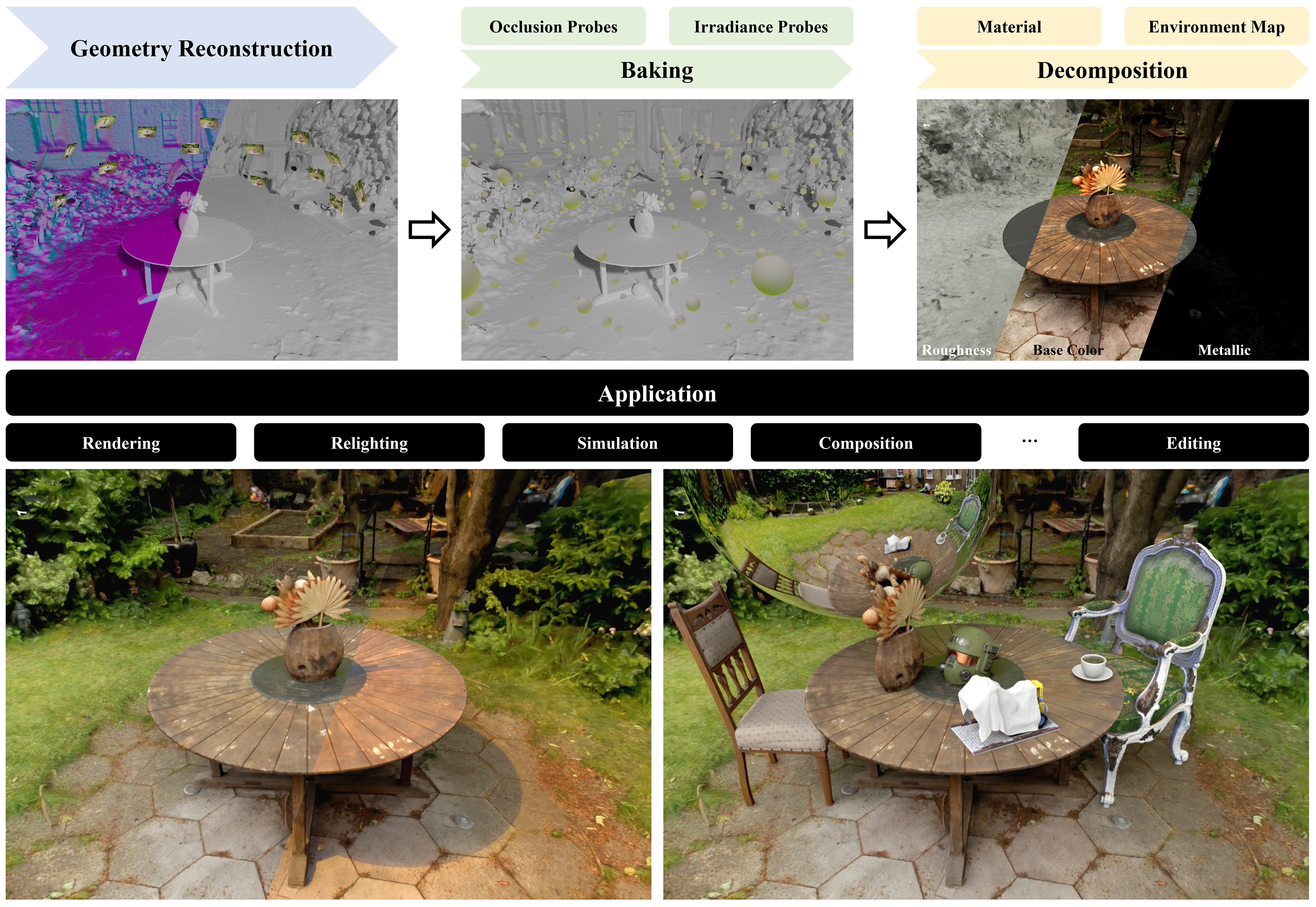

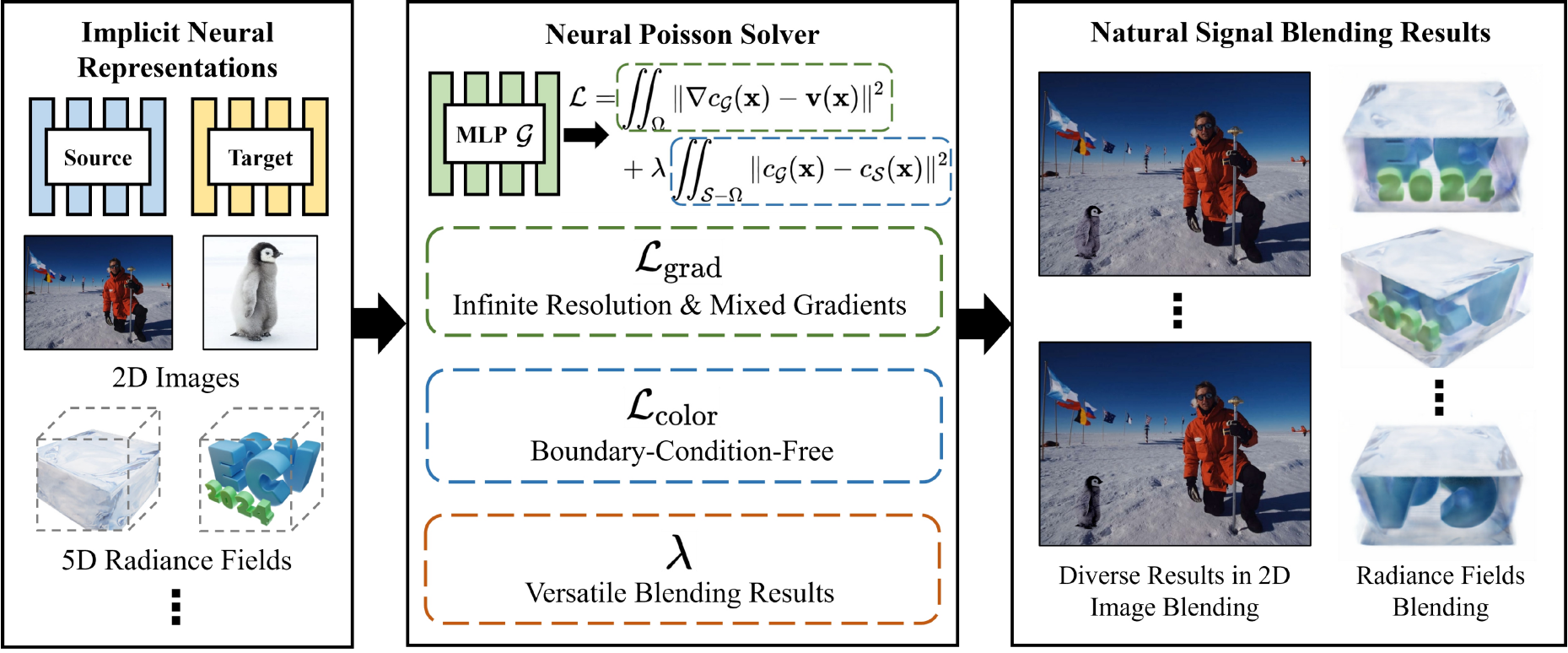

Zhihao Liang, Hongdong Li, Kui Jia, Kailing Guo, Qi Zhang TPAMI, 2025 Project Page / arXiv / Code In this paper, we present GUS-IR, a novel framework designed to address the inverse rendering problem for complicated scenes featuring rough and glossy surfaces. |

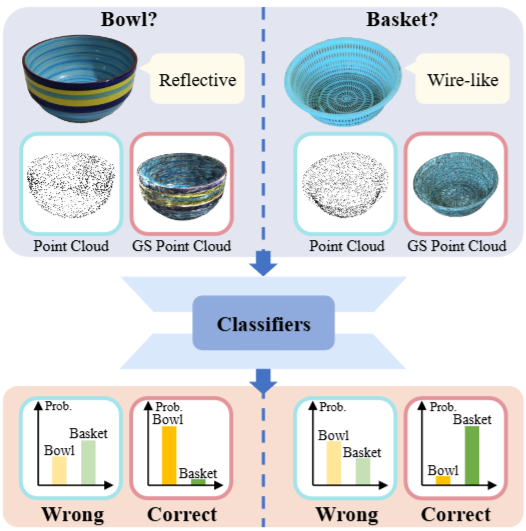

CVPR 2025

|

Ruiqi Zhang*, Hao Zhu*, Jingyi Zhao, Qi Zhang, Xun Cao, Zhan Ma CVPR, 2025 arXiv This paper proposes 3D classification based on Gaussian Splatting (GS) point clouds, and finds that the scale and rotation coefficients in GS point clouds help characterize surface types. |

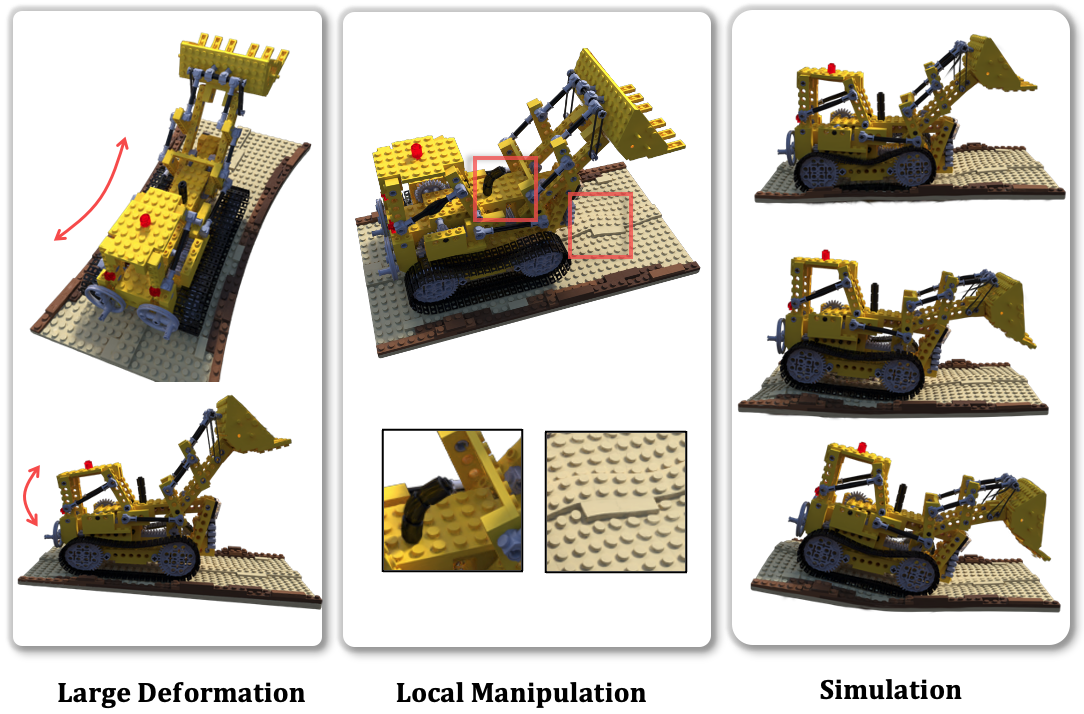

CVPR 2025

|

Xiangjun Gao, Xiaoyu Li, Yiyu Zhuang, Qi Zhang, Wenbo Hu, Chaopeng Zhang, Yao Yao, Ying Shan, Long Quan CVPR, 2025 Project Page / arXiv This approach reduces the need to design separate algorithms for different types of Gaussian manipulation. Using a triangle-shape-aware Gaussian binding and adapting method, it manipulates 3DGS while preserving high-fidelity rendering after manipulation. |

CVPR 2025

|

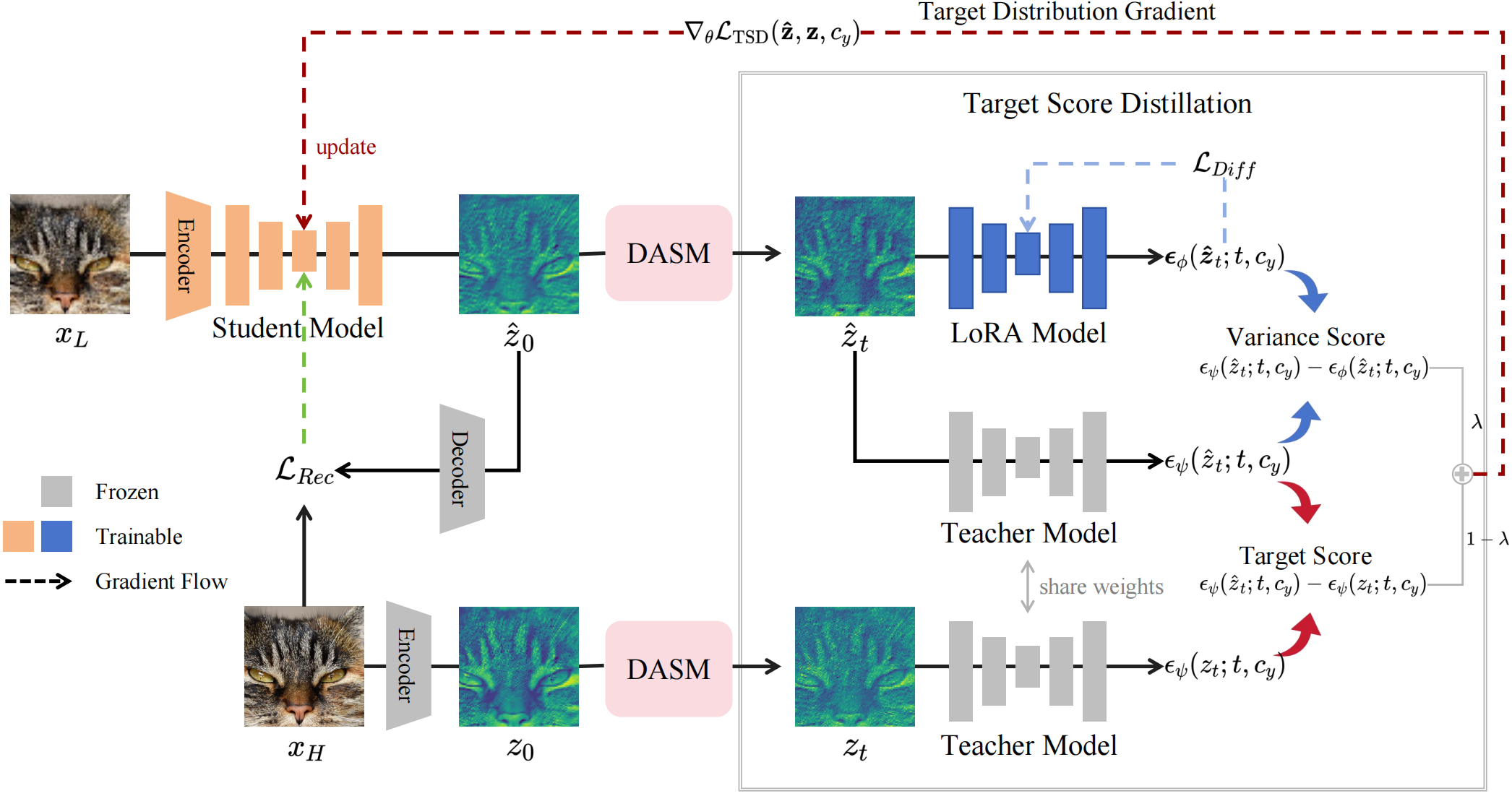

Linwei Dong*, Qingnan Fan*, Yihong Guo, Zhonghao Wang, Qi Zhang, Jinwei Chen, Yawei Luo, Changqing Zou CVPR, 2025 Project Page / arXiv This paper proposes TSD-SR, a novel distillation framework specifically designed for real-world image super-resolution, aiming to construct an efficient and effective one-step model. |

TVCG 2025

|

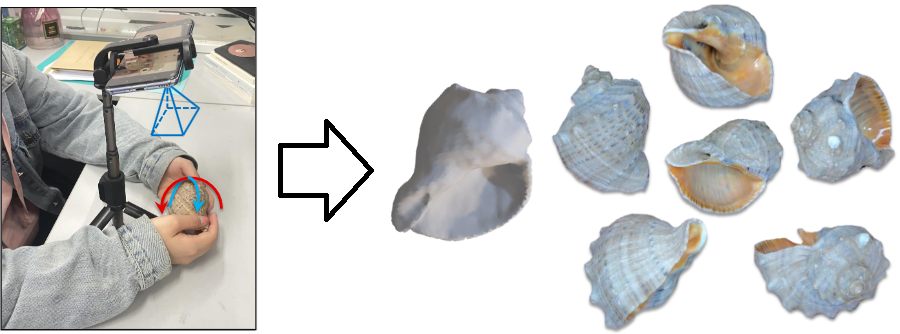

Xinxin Liu, Qi Zhang, Xin Huang, Ying Feng, Guoqing Zhou, Qing Wang IEEE Transactions on Visualization and Computer Graphics (TVCG), 2025 Project Page In this paper, we propose H2O-NeRF, a novel neural representation-based framework that recovers the radiance fields of two-hand-held objects. |

arXiv 2025

|

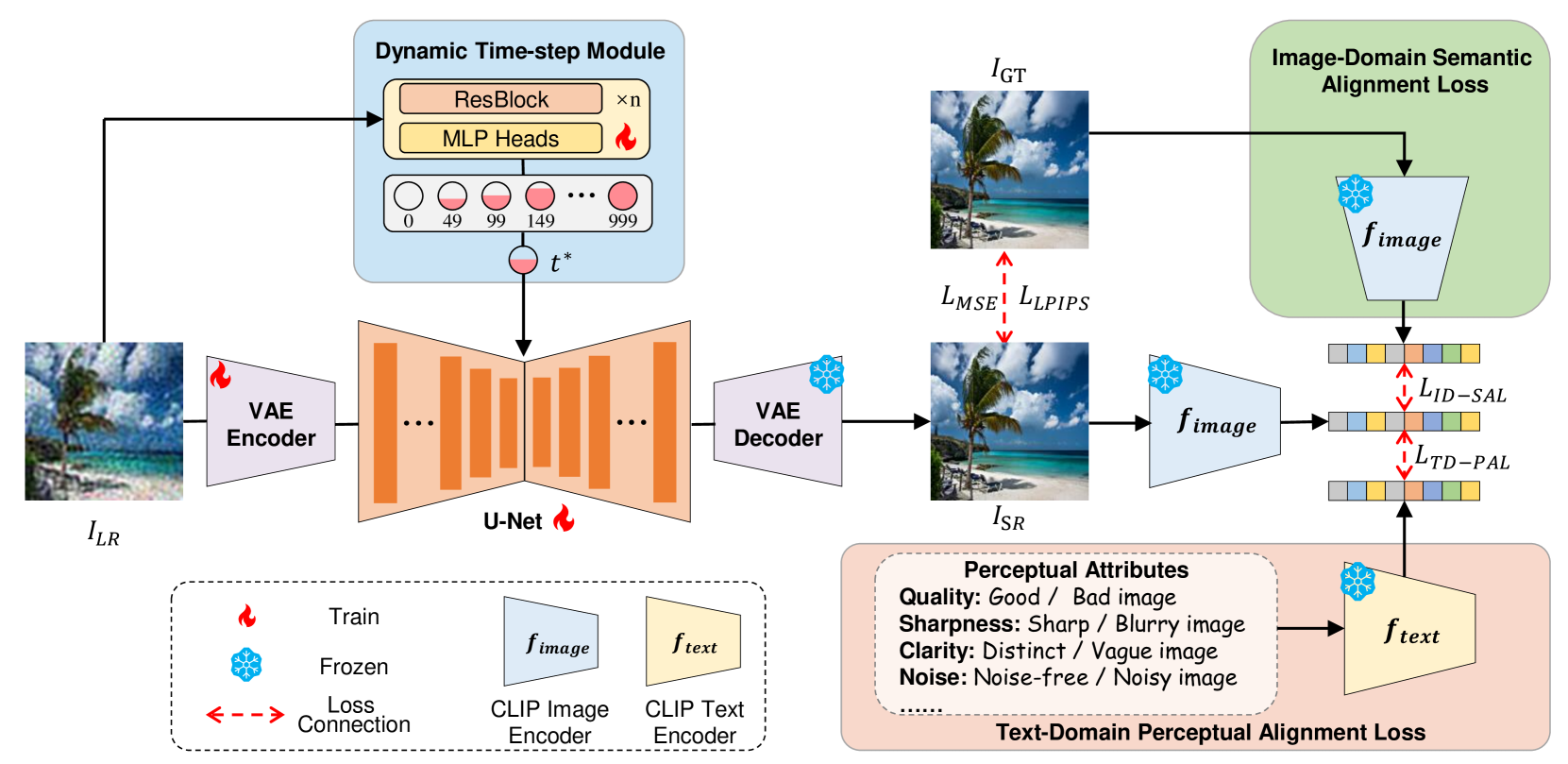

Jiangang Wang, Qingnan Fan, Qi Zhang, Haigen Liu, Yuhang Yu, Jinwei Chen, Wenqi Ren arXiv, 2025 Project Page / arXiv / Code Hero-SR consists of two novel modules: the Dynamic Time-Step Module (DTSM), which adaptively selects optimal diffusion steps to flexibly meet human perceptual standards, and the Open-World Multi-modality Supervision (OWMS), which integrates guidance from both image and text domains through CLIP to improve semantic consistency and perceptual naturalness. |

arXiv 2025

|

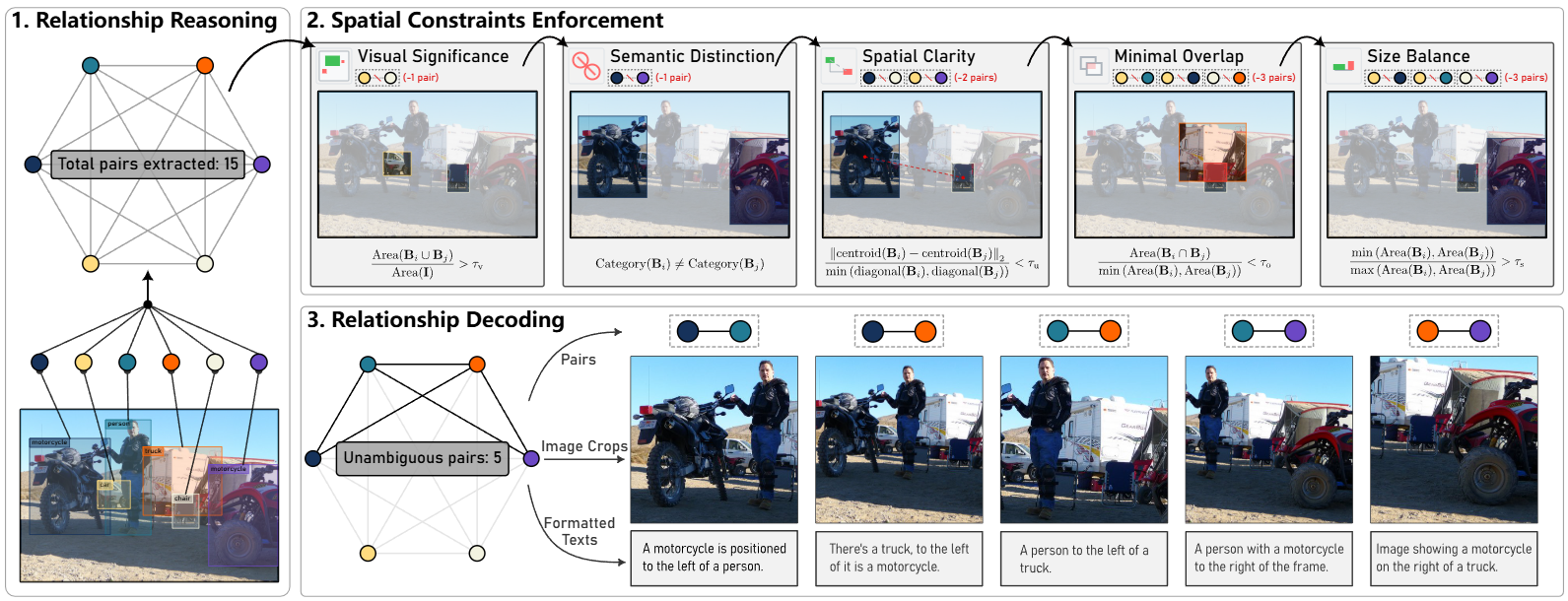

Gaoyang Zhang, Bingtao Fu, Qingnan Fan, Qi Zhang, Runxing Liu, Hong Gu, Huaqi Zhang, Xinguo Liu arXiv, 2025 Project Page / arXiv / Code CoMPaSS significantly enhances spatial understanding in text-to-image diffusion models while preserving their text-only input nature, requiring zero additional parameters and negligible computational overhead during both training and inference, and seamlessly integrating with any model architecture or dataset. |

SPL 2025

|

Zhihao Liang, Qi Zhang, Yirui Guan, Ying Feng, Kui Jia IEEE Signal Processing Letters, 2025 arXiv We present a novel three-stage Inverse Renderer with Probes (IR-Pro), which efficiently caches occlusion to handle indirect illumination. |

TPAMI 2024

|

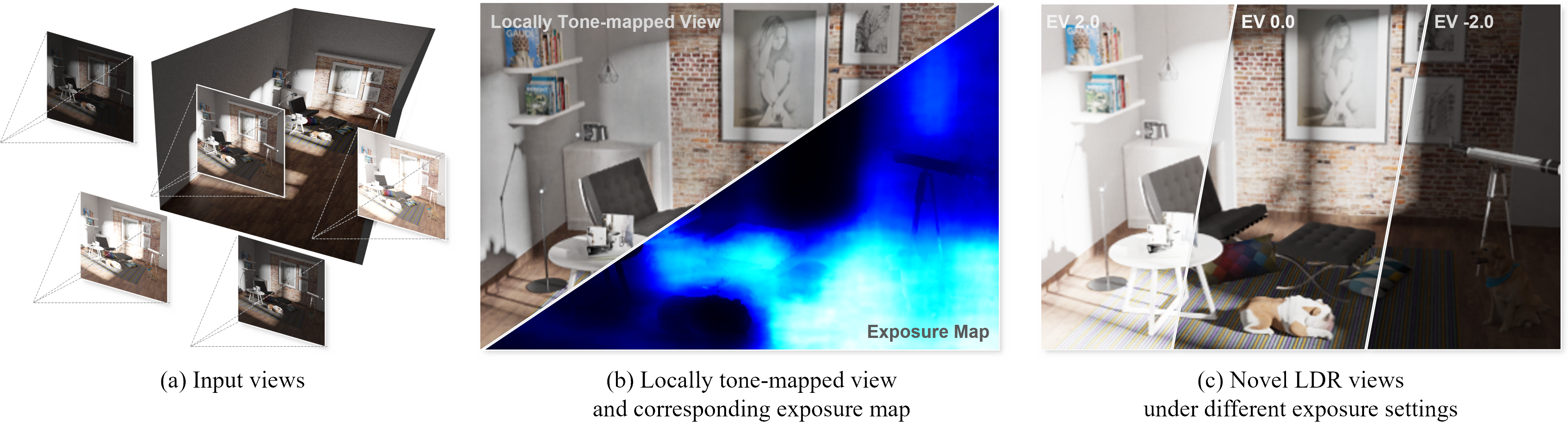

Xin Huang, Qi Zhang, Ying Feng, Hongdong Li, Qing Wang IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2024 Project Page / arXiv Our LTM-NeRF, which incorporates a Camera Response Function (CRF) module and a Neural Exposure Field, integrates seamlessly with NeRF. |

|

ECCV 2024 (Oral)

|

Zhihao Liang, Qi Zhang, Wenbo Hu, Lei Zhu, Ying Feng, Kui Jia ECCV, 2024 Project Page / arXiv / Code / Viewer In this paper, we derive an analytical solution to address the aliasing caused by discrete sampling in 3DGS. |

|

ECCV 2024

|

Delong Wu, Hao Zhu, Qi Zhang, You Li, Xun Cao, Zhan Ma ECCV, 2024 Project Page / arXiv / Code |

|

ECCV 2024

|

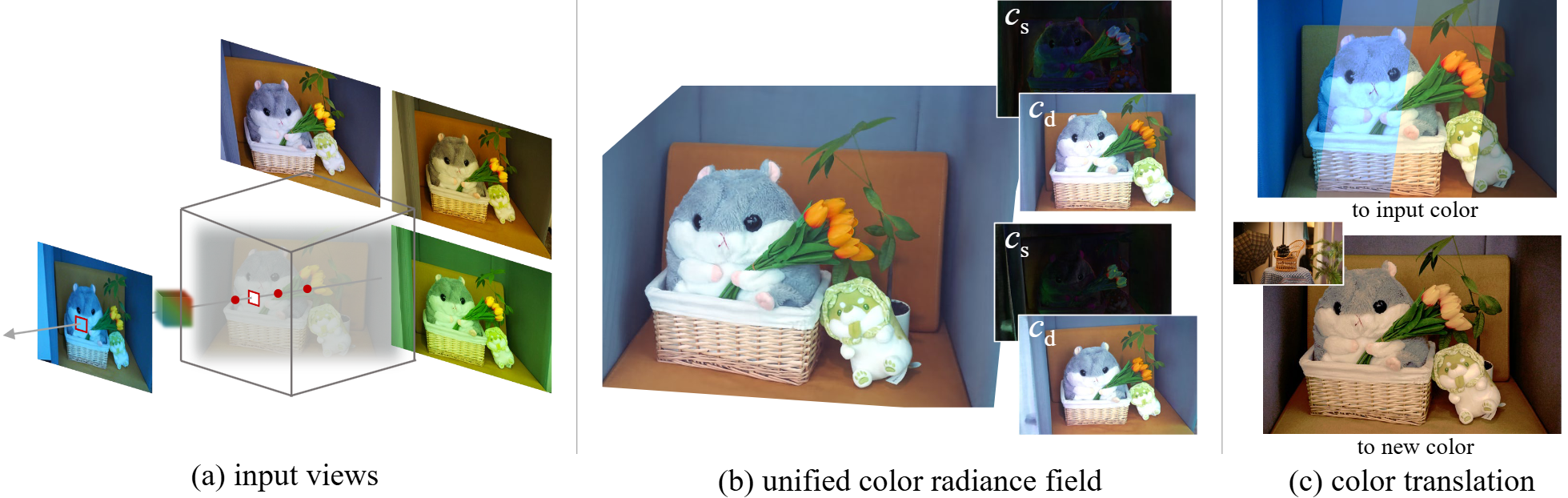

Qi Zhang, Ying Feng, Hongdong Li ECCV, 2024 Project Page / arXiv / Code In this paper, we address color inconsistency across cameras by proposing a novel color correction module that simulates the physical color processing in cameras and is embedded in NeRF, enabling unified color NeRF reconstruction. |

|

ECCV 2024

|

Yuxiao He, Yiyu Zhuang, Yanwen Wang, Yao Yao, Siyu Zhu, Xiaoyu Li, Qi Zhang, Xun Cao, Hao Zhu ECCV, 2024 Project Page / arXiv / Code / Data Our model is represented by a neural radiance field with hexplanes, conditioned on a generative neural texture and a parametric 3D mesh model. |

|

arXiv 2024

|

Xiaoyu Li*, Qi Zhang*, Di Kang, Weihao Cheng, Yiming Gao, Jingbo Zhang, Zhihao Liang, Jing Liao, Yanpei Cao, Ying Shan arXiv, 2024 Project Page / arXiv / Code In this survey, we aim to introduce the fundamental methodologies of 3D generation methods and establish a structured roadmap, encompassing 3D representation, generation methods, datasets, and corresponding applications. |

Knowledge-Based Systems 2025

|

Yujiao Jiang, Qingmin Liao, Xiaoyu Li, Li Ma, Qi Zhang, Chaopeng Zhang, Zongqing Lu, Ying Shan Knowledge-Based Systems, 2025 Project Page / arXiv / Code |

|

CVPR 2024

|

Zhihao Liang*, Qi Zhang*, Ying Feng, Ying Shan, Kui Jia CVPR, 2024 Project Page / arXiv / Code We propose GS-IR, a novel inverse rendering approach based on 3D Gaussian Splatting (GS) that leverages forward mapping volume rendering to achieve photorealistic novel view synthesis and relighting results. |

CVPR 2024

|

Xin Huang*, Ruizhi Shao*, Qi Zhang, Hongwen Zhang, Ying Feng, Yebin Liu, Qing Wang CVPR, 2024 Project Page / arXiv / Code We propose HumanNorm, a novel approach for high-quality and realistic 3D human generation that learns a normal diffusion model comprising a normal-adapted diffusion model and a normal-aligned diffusion model. |

|

CVPR 2024

|

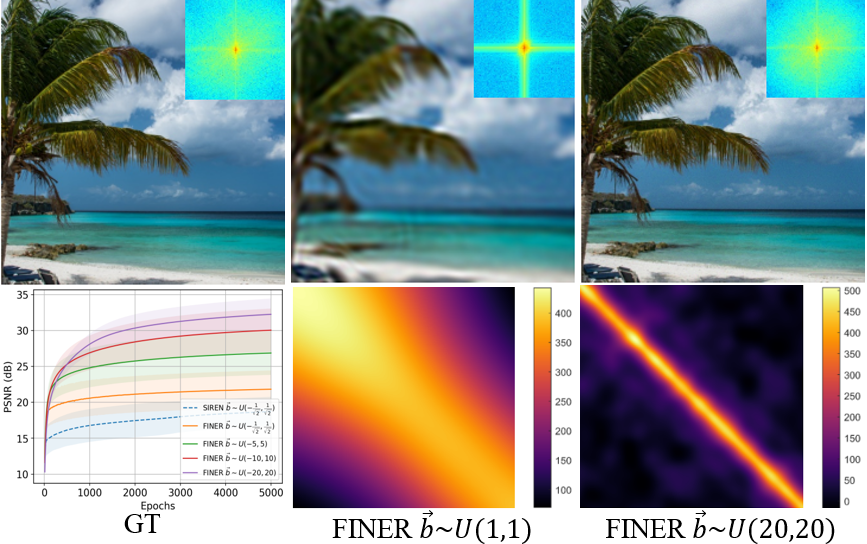

Zhen Liu*, Hao Zhu*, Qi Zhang, Jingde Fu, Weibing Deng, Zhan Ma, Yanwen Guo, Xun Cao CVPR, 2024 Project Page / PDF / Code We find that the frequency-related problem of implicit neural representations (INRs) can be greatly alleviated by introducing variable-periodic activation functions, for which we propose FINER. |

CVPR 2024

|

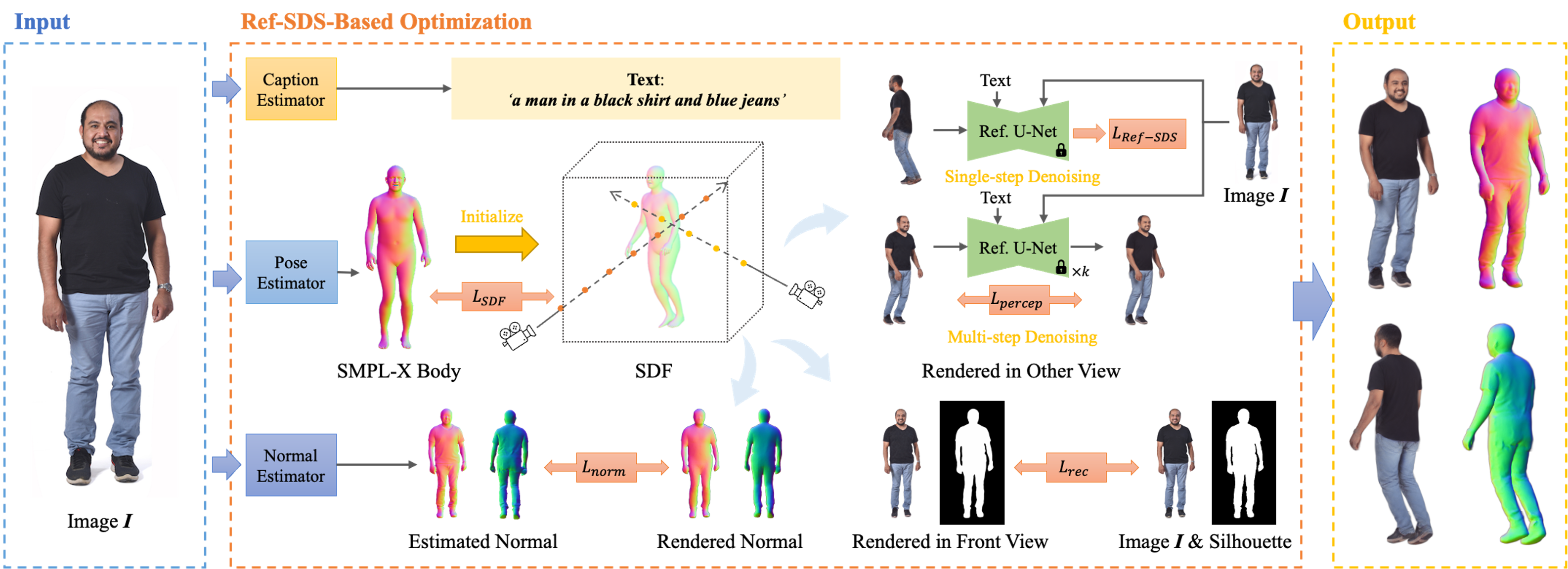

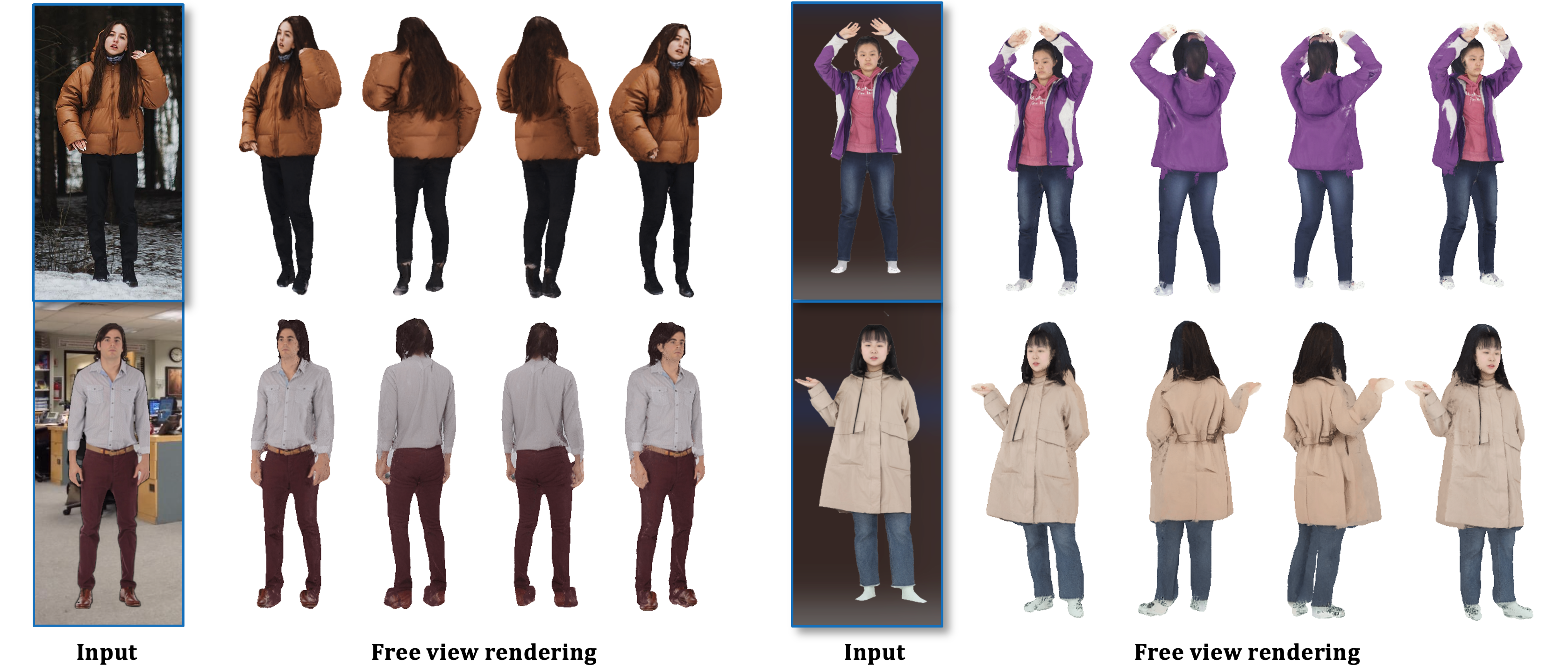

Jingbo Zhang, Xiaoyu Li, Qi Zhang, Yanpei Cao, Ying Shan, Jing Liao CVPR, 2024; extended as HumanRef-GS in TCSVT, 2025 Project Page / arXiv / Code HumanRef, a reference-guided 3D human generation framework, generates 3D clothed humans with realistic, view-consistent texture and geometry from a single input image with the help of a stable diffusion model. |

CVPR 2024

|

Xiangjun Gao, Xiaoyu Li, Chaopeng Zhang, Qi Zhang, Yanpei Cao, Ying Shan, Long Quan CVPR, 2024 Project Page / arXiv / Code In this paper, we introduce a texture-consistent back view synthesis module that could transfer the reference image content to the back view through depth and text-guided attention injection with the help of stable diffusion model. |

|

AAAI 2024

|

Yiyu Zhuang*, Qi Zhang*, Xuan Wang, Hao Zhu, Ying Feng, Xiaoyu Li, Ying Shan, Xun Cao AAAI, 2024 Project Page / arXiv / Code We propose a fully differentiable framework named neural ambient illumination (NeAI) that uses Neural Radiance Fields (NeRF) as a lighting model to handle complex lighting in a physically based way. |

|

TPAMI 2024

|

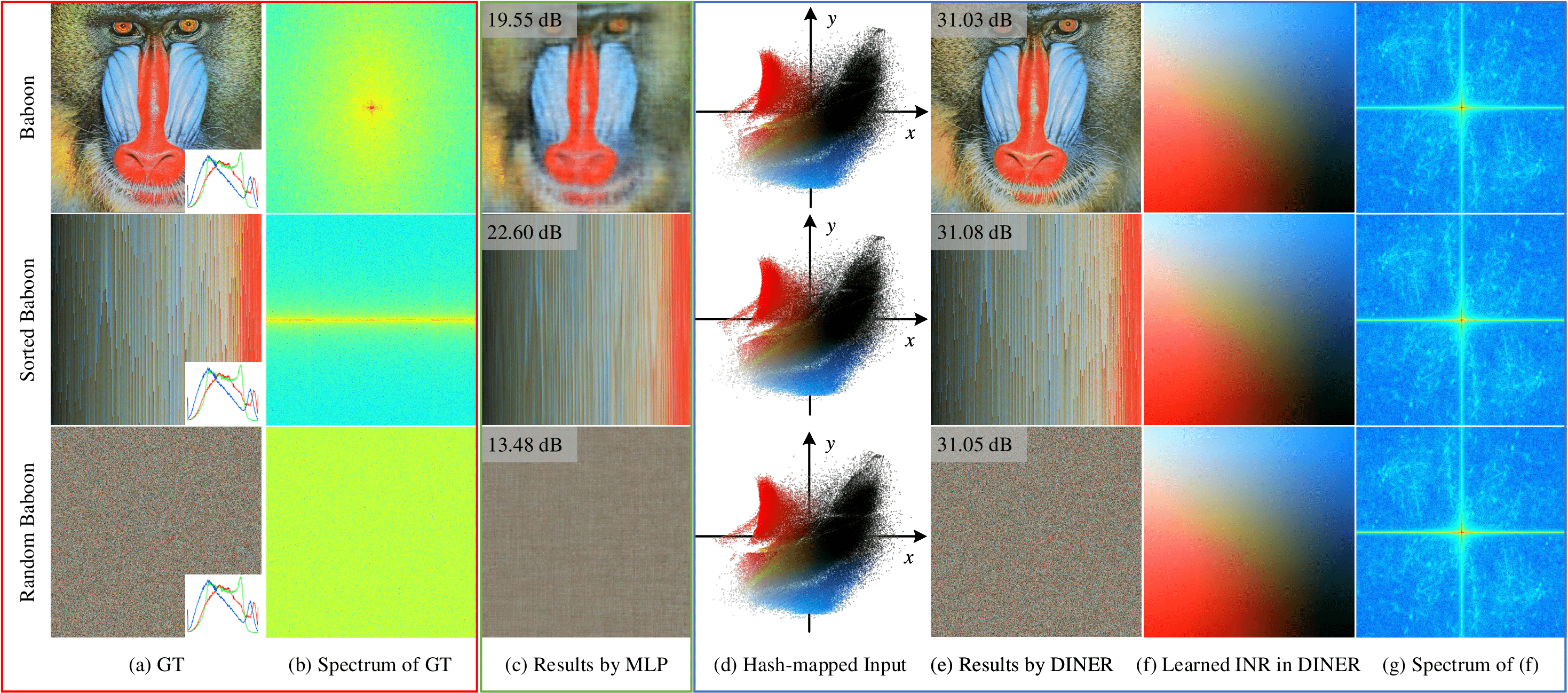

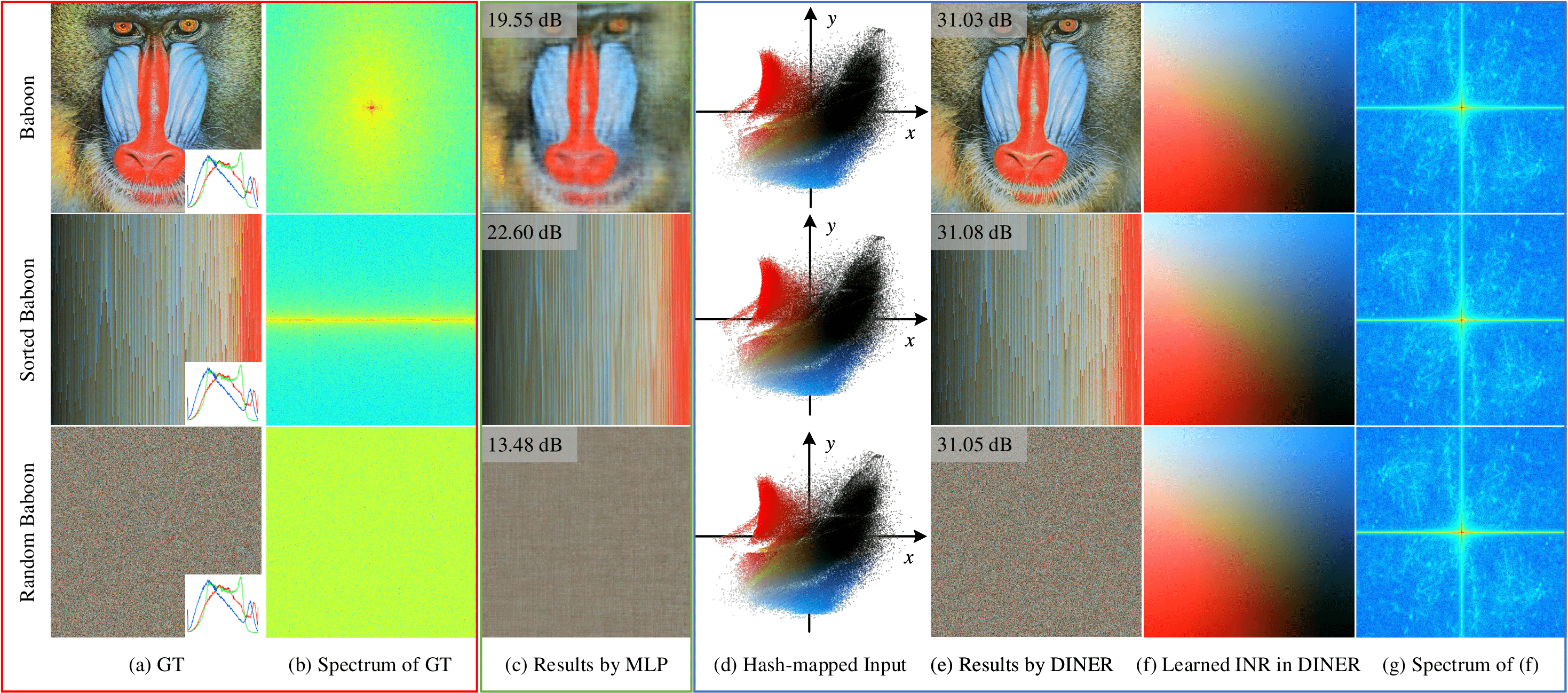

Hao Zhu*, Shaowen Xie*, Zhen Liu*, Fengyi Liu, Qi Zhang, You Zhou, Yi Lin, Zhan Ma, Xun Cao IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2024 Project Page / arXiv / Code In this paper, we find that the frequency-related problem of implicit neural representations (INRs) can be largely solved by re-arranging the coordinates of the input signal, for which we propose the disorder-invariant implicit neural representation (DINER), which augments a traditional INR backbone with a hash table. |

|

SIGGRAPH Asia 2023

|

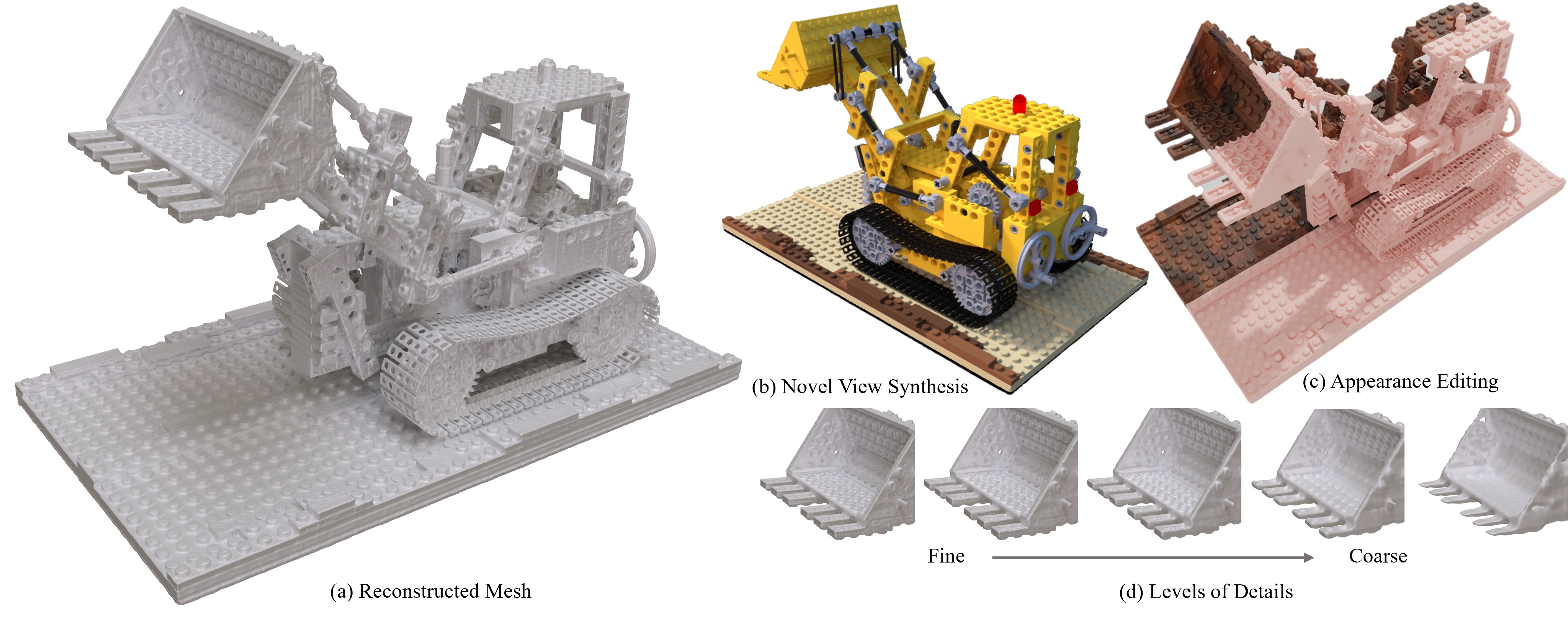

Yiyu Zhuang*, Qi Zhang*, Ying Feng, Hao Zhu, Yao Yao, Xiaoyu Li, Yanpei Cao, Ying Shan, Xun Cao SIGGRAPH Asia, 2023 Project Page / arXiv / Code Our method, called LoD-NeuS, adaptively encodes Level of Detail (LoD) features derived from a multi-scale and multi-convoluted tri-plane representation. By optimizing a neural Signed Distance Field (SDF), our method is capable of reconstructing high-fidelity geometry.

|

IJCV 2023

|

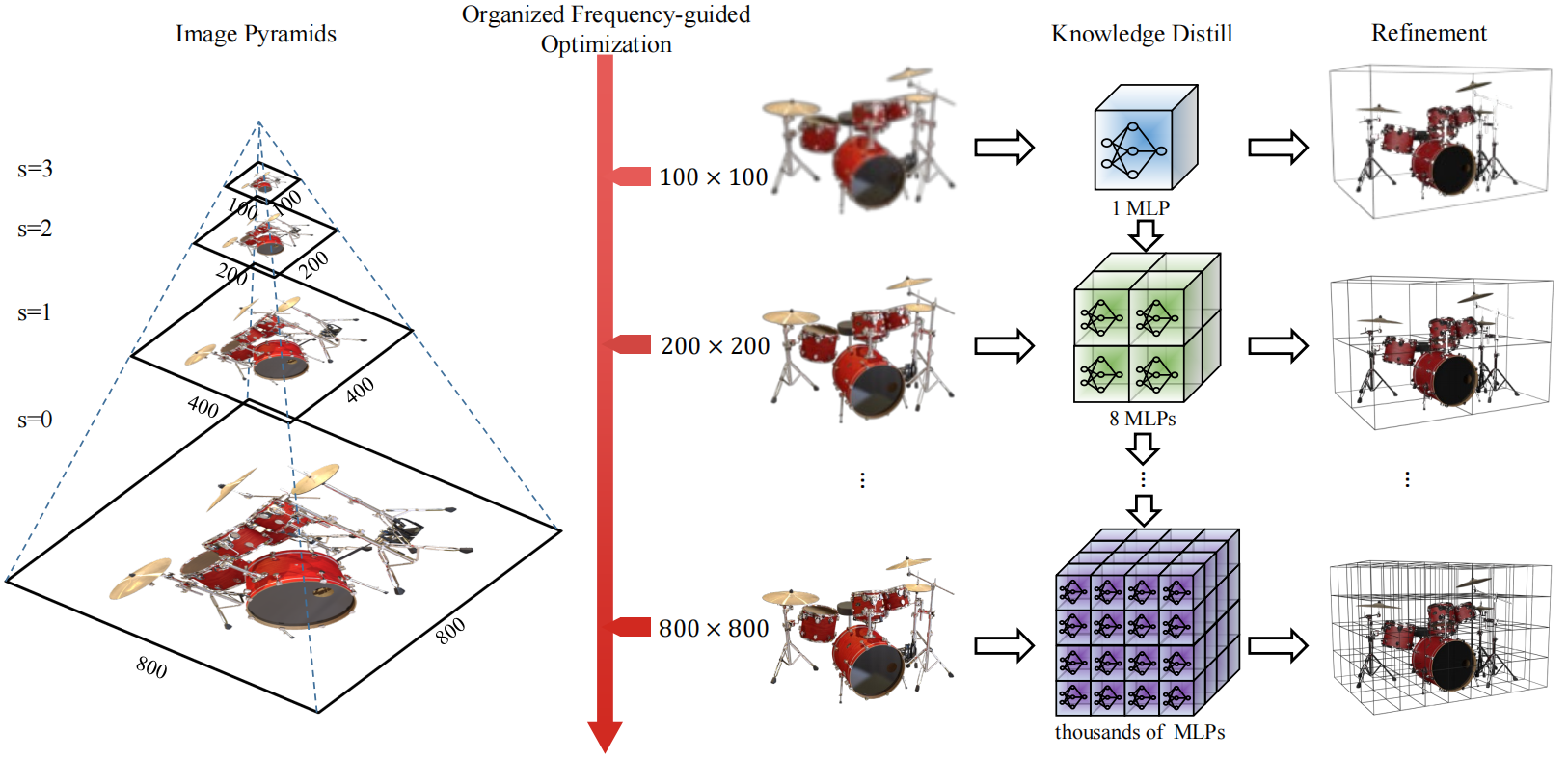

Junyu Zhu*, Hao Zhu*, Qi Zhang, Fang Zhu, Zhan Ma, Xun Cao International Journal of Computer Vision (IJCV), 2023 Project Page / PDF / Code In this paper, we propose Pyramid NeRF, which guides NeRF training in a 'low-frequency first, high-frequency second' style using image pyramids, speeding up training and inference by 15x and 805x, respectively. |

|

CVPR 2023

|

Qi Zhang, Hongdong Li, Qing Wang IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023 Project Page / arXiv We propose a new content-aware optimization framework to preserve both local conformal shape (e.g. face or salient regions) and global linear structures (straight lines). |

|

CVPR 2023

|

Xin Huang, Qi Zhang, Ying Feng, Hongdong Li, Qing Wang IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023 Project Page / arXiv / Code This paper proposes a novel implicit camera model that represents the physical imaging process of a camera as a deep neural network. We demonstrate the power of this new implicit camera model on two inverse imaging tasks: i) generating all-in-focus photos, and ii) HDR imaging. |

|

CVPR 2023

|

Xin Huang, Qi Zhang, Ying Feng, Xiaoyu Li, Xuan Wang, Qing Wang IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023 Project Page / arXiv / Code We propose LIRF (Local Implicit Ray Function), a generalizable neural rendering approach for novel view rendering. Given 3D positions within conical frustums, LIRF takes 3D coordinates and the features of conical frustums as inputs and predicts a local volumetric radiance field. |

|

CVPR 2023 (Highlight)

|

Shaowen Xie*, Hao Zhu*, Zhen Liu*, Qi Zhang, You Zhou, Xun Cao, Zhan Ma IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023 Project Page / arXiv / Code In this paper, we find that the frequency-related problem of implicit neural representations (INRs) can be largely solved by re-arranging the coordinates of the input signal, for which we propose the disorder-invariant implicit neural representation (DINER), which augments a traditional INR backbone with a hash table. |

|

CVPR 2023

|

Yue Chen, Xingyu Chen, Xuan Wang, Qi Zhang, Yu Guo, Ying Shan, Fei Wang IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023 Project Page / arXiv / Code We propose L2G-NeRF, a Local-to-Global registration method for bundle-adjusting Neural Radiance Fields, including pixel-wise local alignment and frame-wise global alignment. |

|

CVPR 2023

|

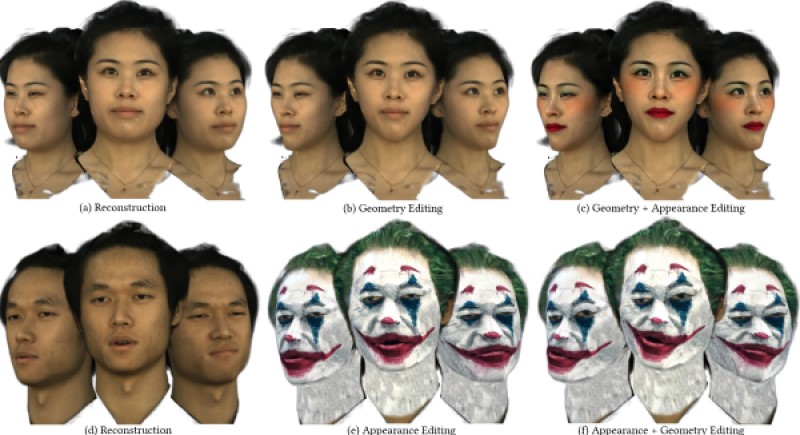

Yue Chen, Xuan Wang*, Xingyu Chen, Qi Zhang, Xiaoyu Li, Yu Guo, Jue Wang, Fei Wang IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023 Project Page / arXiv / Code / Video We propose UV Volumes, a new approach to real-time rendering and editable NeRF that decomposes a dynamic human into 3D UV Volumes and a 2D appearance texture. |

|

CVPR 2023

|

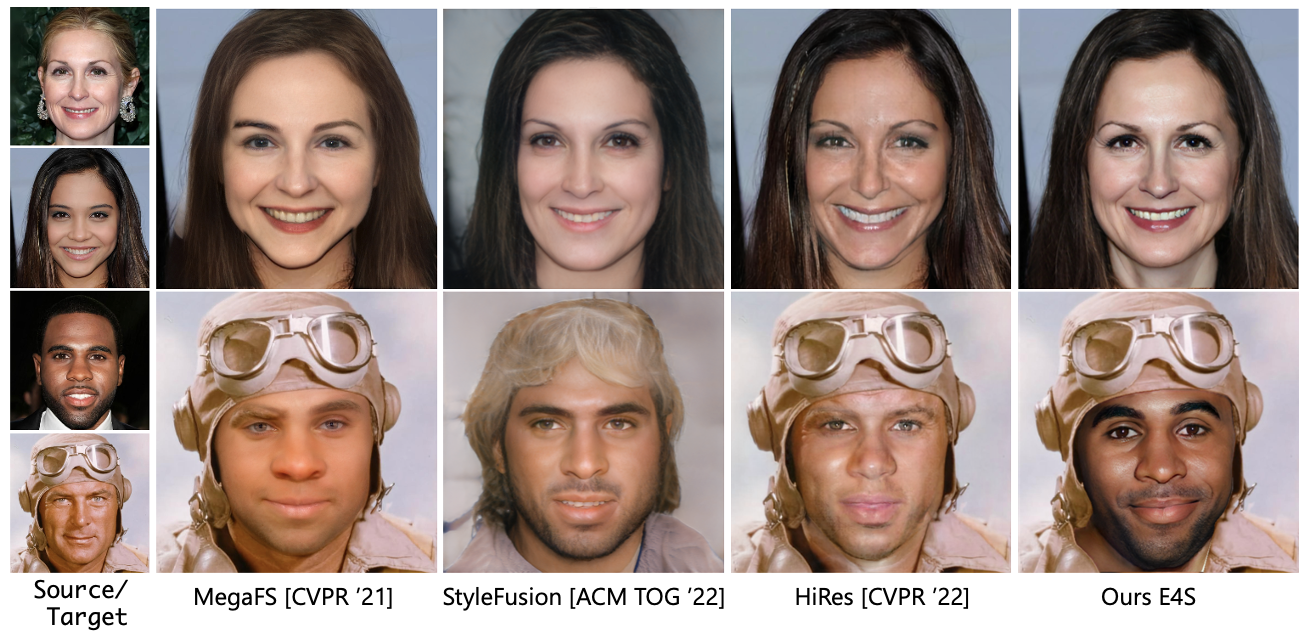

Zhian Liu*, Maomao Li*, Yong Zhang*, Cairong Wang, Qi Zhang, Jue Wang, Yongwei Nie IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2023 Project Page / arXiv / Code We present a novel paradigm for high-fidelity face swapping that faithfully preserves the desired subtle geometry and texture details. |

|

SIGGRAPH 2022

|

Li Ma, Xiaoyu Li, Jing Liao, Xuan Wang, Qi Zhang, Jue Wang, Pedro V. Sander ACM Transactions on Graphics, 2022 Project Page / arXiv / Code We introduce explicit parameters into implicit dynamic NeRF representations to enable editing of 3D human heads. |

|

arXiv 2022

|

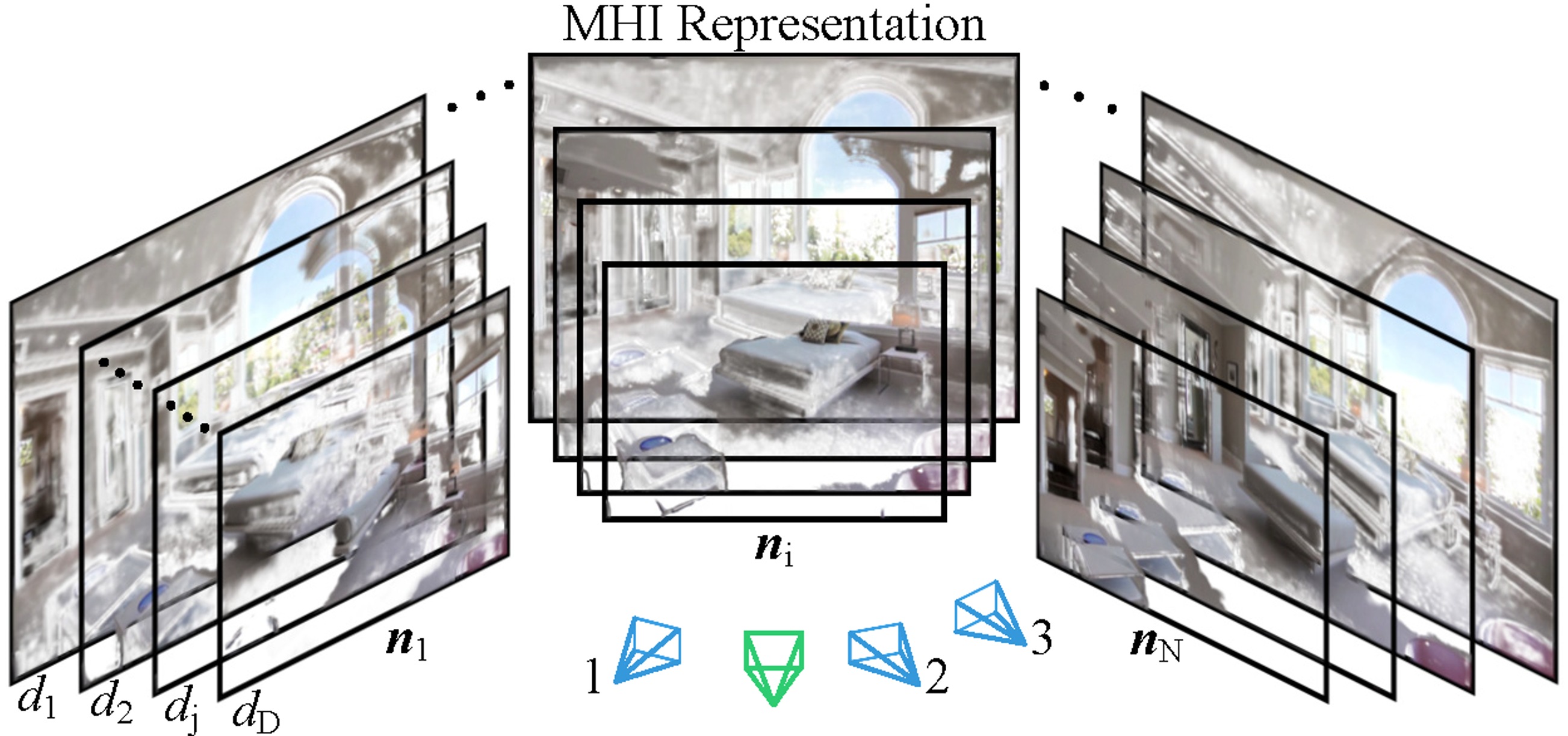

Qi Zhang*, Xin Huang*, Ying Feng, Xue Wang, Hongdong Li, Qing Wang arXiv, 2022 arXiv We propose a novel multiple homography image (MHI) representation, comprising a set of scene planes with fixed normals and distances, for view synthesis from stereo images. |

|

CVPR 2022

|

Xin Huang, Qi Zhang, Ying Feng, Hongdong Li, Xuan Wang, Qing Wang IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022 Project Page / arXiv / Code / Dataset / Video We present High Dynamic Range Neural Radiance Fields (HDR-NeRF) to recover an HDR radiance field from a set of low dynamic range (LDR) views with different exposures. |

|

CVPR 2022

|

Xingyu Chen, Qi Zhang, Xiaoyu Li, Yue Chen, Ying Feng, Xuan Wang, Jue Wang IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022 Project Page / arXiv This paper studies the problem of hallucinated NeRF, i.e., recovering a realistic NeRF at a different time of day from a group of tourism images. |

|

CVPR 2022

|

Li Ma, Xiaoyu Li, Jing Liao, Qi Zhang, Xuan Wang, Jue Wang, Pedro V. Sander IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022 Project Page / arXiv In this paper, we propose Deblur-NeRF, the first method that can recover a sharp NeRF from blurry input. A novel Deformable Sparse Kernel (DSK) module is presented for both camera motion blur and defocus blur. |

|

CVPR 2022

|

Jingxiang Sun, Xuan Wang, Yong Zhang, Xiaoyu Li, Qi Zhang, Yebin Liu, Jue Wang IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022 Project Page / arXiv A 3D-aware generator (FENeRF) is proposed to produce view-consistent and locally editable portrait images. |

|

TPAMI 2022

|

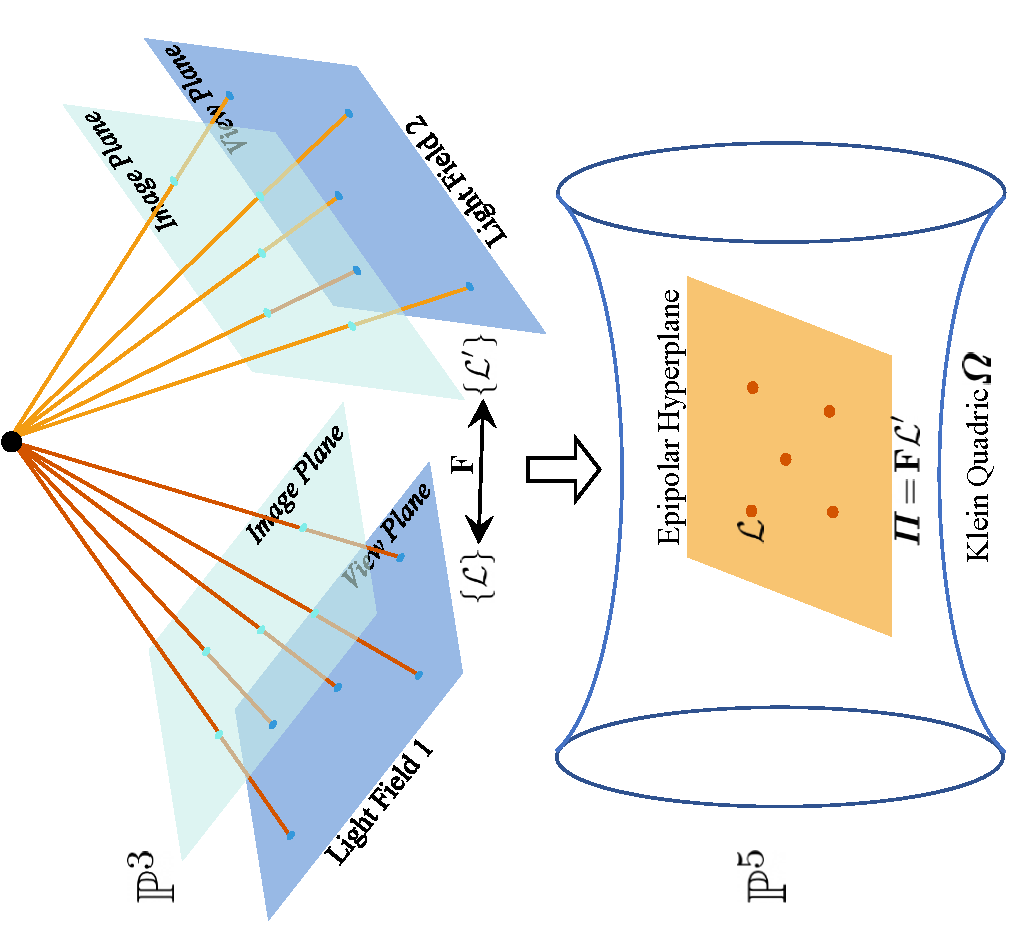

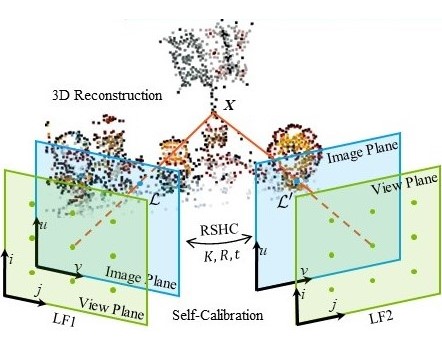

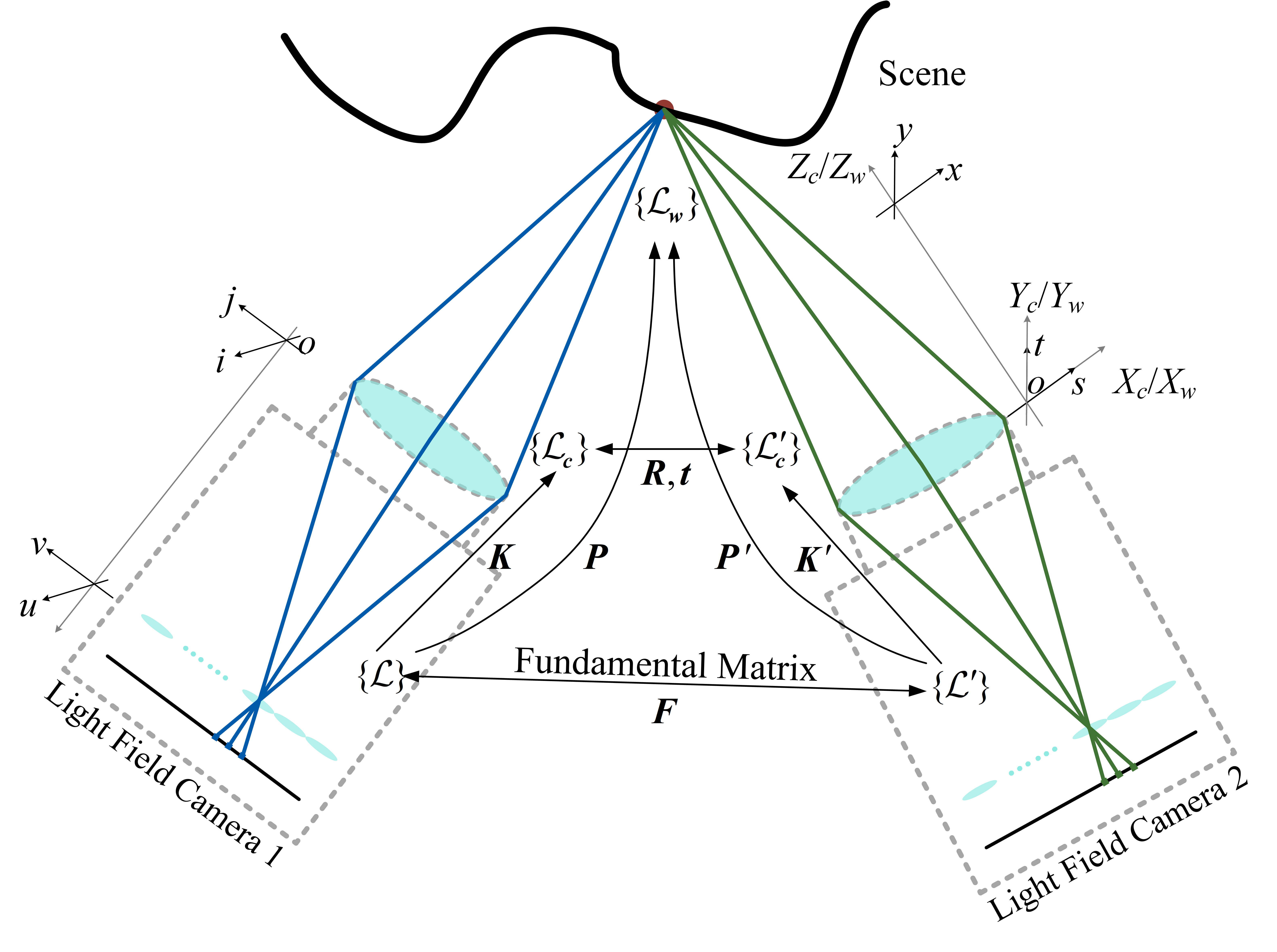

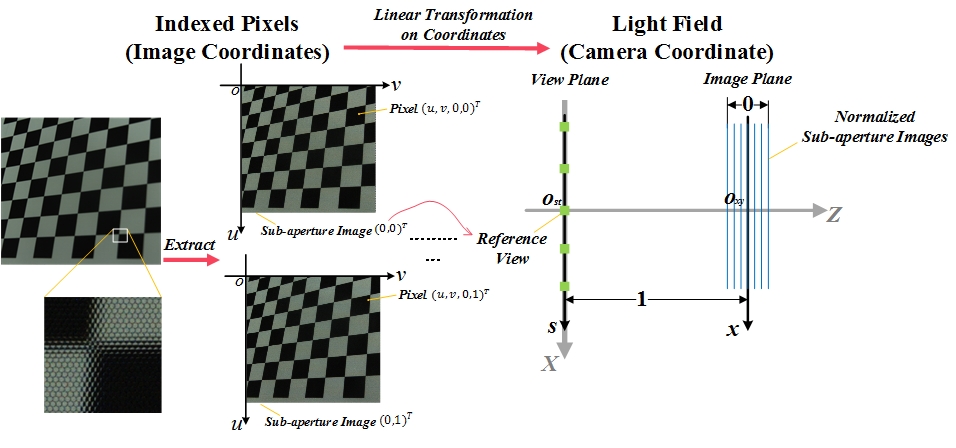

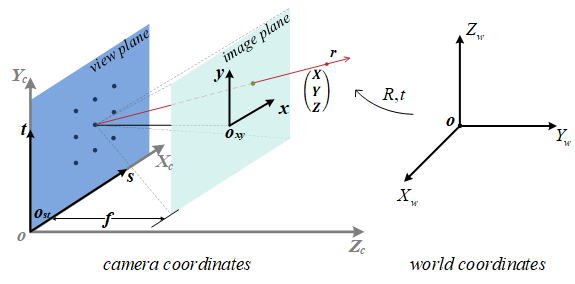

Qi Zhang, Qing Wang, Hongdong Li, Jingyi Yu IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2022 PDF / bibtex This paper develops a novel ray-space epipolar geometry that intrinsically encapsulates the complete projective relationship between two light fields. The ray-space fundamental matrix and its properties are then derived to constrain ray-ray correspondences for general and special motions. |

|

IJCV 2021

|

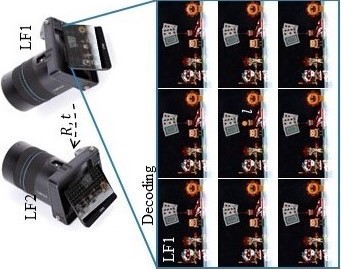

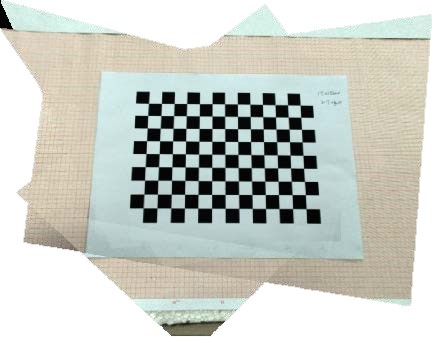

Qi Zhang, Hongdong Li, Xue Wang, Qing Wang International Journal of Computer Vision (IJCV), 2021 PDF / bibtex This paper is concerned with the problem of multi-view 3D reconstruction with an uncalibrated micro-lens array based light field camera. |

|

TCI 2019

|

Hao Zhu, Xiaoming Sun, Qi Zhang, Qing Wang, Antonio Robles-Kelly, Hongdong Li, Shaodi You IEEE Transactions on Computational Imaging, 2019 PDF / bibtex Our method exploits the structure of the four-dimensional light field across multiple views, using superpixels for full-view optical flow estimation. |

|

CVPR 2019

|

Qi Zhang, Jinbo Ling, Qing Wang, Jingyi Yu IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019 PDF / Supp / bibtex In this paper, we propose a novel ray-space projection model to transform sets of rays captured by multiple light field cameras in terms of Plücker coordinates. |

|

TIP 2019

|

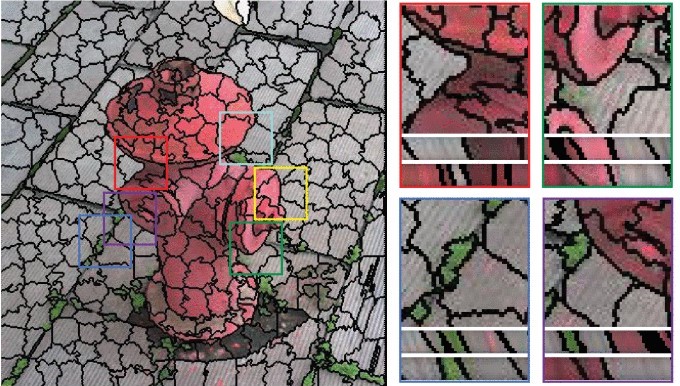

Hao Zhu, Qi Zhang, Qing Wang, Hongdong Li IEEE Transactions on Image Processing (TIP), 2019; IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2017 PDF / bibtex The light field superpixel (LFSP) is first defined mathematically, and then a refocus-invariant metric named LFSP self-similarity is proposed to evaluate segmentation performance. |

|

TPAMI 2019

|

Qi Zhang, Chunping Zhang, Jinbo Ling, Qing Wang, Jingyi Yu IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2019 arXiv / PDF / Code / bibtex The multi-projection-center (MPC) model can parameterize light fields across different imaging formations, including conventional and focused light field cameras. |

|

Qi Zhang, Qing Wang. Common self-polar triangle of separate circles for light field camera calibration[J]. Xibei Gongye Daxue Xuebao/Journal of Northwestern Polytechnical University, 2021. |

|

This template is a modification of Jon Barron's website. Feel free to clone it for your own use while attributing the original author, Jon Barron. |